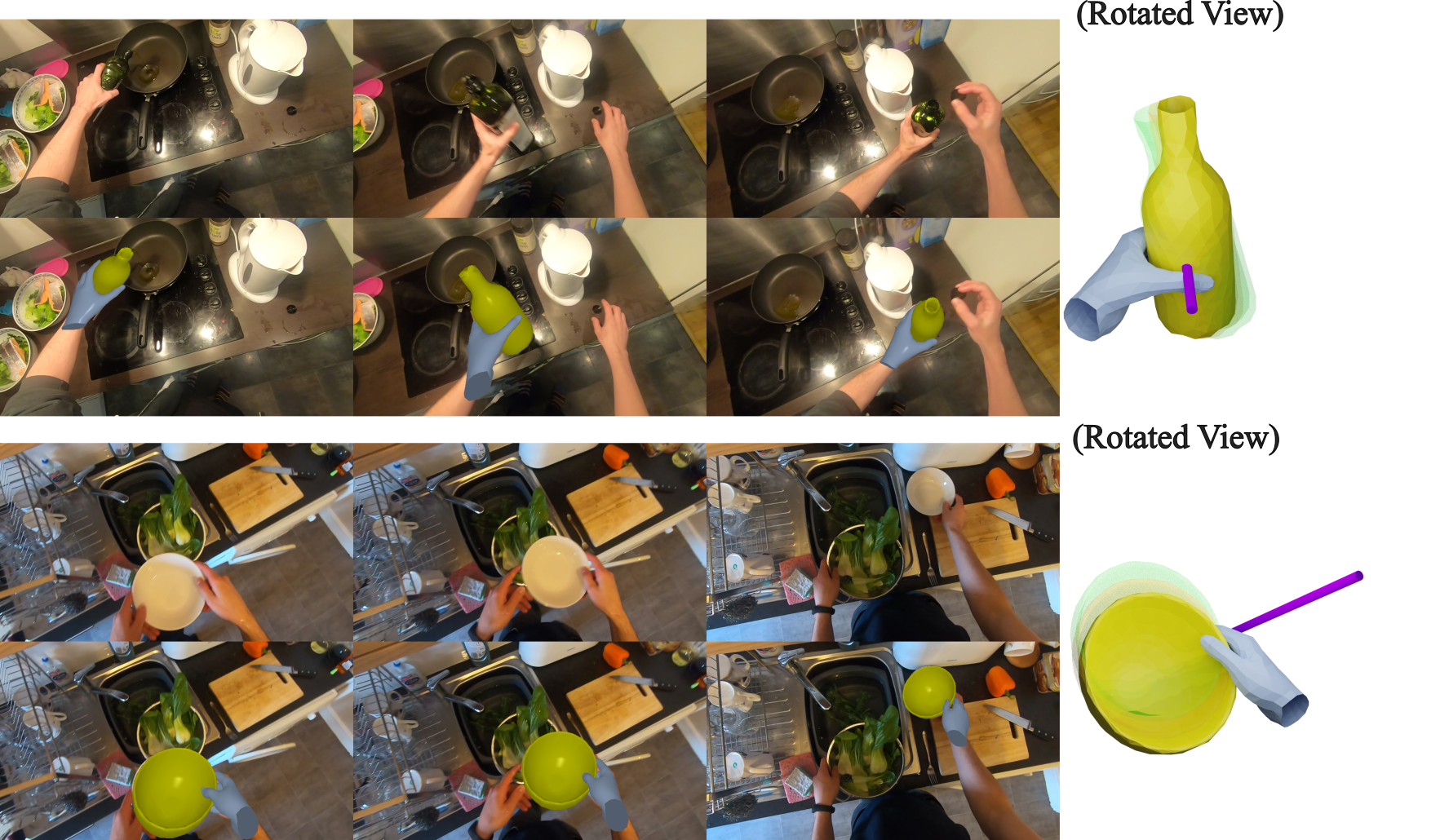

We propose the task of Hand-Object Stable Grasp Reconstruction (HO-SGR): reconstructing the frames during which the hand stably holds the object. We first define a stable grasp based on the intuition that the in-contact area between the hand and the object should remain stable. By analysing the 3D ARCTIC dataset, we identify stable grasp durations and show that objects in stable grasps move within a single degree of freedom (1-DoF). We therefore propose a method that jointly optimises all frames within a stable grasp, minimising object motion to a latent 1-DoF. Finally, we extend this knowledge to in-the-wild videos by labelling 2.4K clips of stable grasps. Our proposed EPIC-Grasps dataset includes 390 object instances from 9 categories, featuring stable grasps from videos of daily interactions in 141 environments. Without 3D ground truth, we use stable contact areas and 2D projection masks to assess the HO-SGR task in the wild. We evaluate relevant methods and show that our approach preserves a significantly higher stable contact area on both EPIC-Grasps and stable grasp sub-sequences from the ARCTIC dataset.
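To make the 1-DoF observation concrete, the following is a minimal diagnostic sketch, not the optimisation method from the paper. It assumes that "1-DoF" can be read as the object rotating about a single fixed axis in the hand's coordinate frame, and that per-frame object rotations relative to the hand are available as 3x3 matrices. The function names (`rotation_about_axis`, `rotation_vector`, `one_dof_score`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def rotation_about_axis(axis, angle):
    """Rodrigues' formula: rotation matrix for `angle` radians about `axis`."""
    axis = np.asarray(axis, dtype=float)
    x, y, z = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def rotation_vector(R):
    """Axis-angle vector (axis * angle) of a rotation matrix.

    Assumes the rotation angle is well below pi, where this formula is stable.
    """
    cos_angle = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    angle = np.arccos(cos_angle)
    if angle < 1e-8:
        return np.zeros(3)
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return angle * w / (2.0 * np.sin(angle))


def one_dof_score(rotations):
    """Fraction of rotational motion explained by one axis (1.0 = exactly 1-DoF).

    `rotations` is a sequence of object-in-hand rotation matrices (assumed input).
    Each frame is compared with the first frame; if all relative rotations share
    one axis, their axis-angle vectors are collinear and the stacked matrix has
    rank one.
    """
    ref = rotations[0]
    vecs = np.stack([rotation_vector(R @ ref.T) for R in rotations[1:]])
    s = np.linalg.svd(vecs, compute_uv=False)
    total = float(np.sum(s ** 2))
    return 1.0 if total < 1e-12 else float(s[0] ** 2 / total)


# Hinge-like motion: every frame rotates about the same axis.
hinge = [rotation_about_axis([0, 0, 1], a) for a in (0.0, 0.1, 0.2, 0.3)]
# Mixed motion: frames rotate about two different axes.
mixed = [np.eye(3),
         rotation_about_axis([1, 0, 0], 0.3),
         rotation_about_axis([0, 1, 0], 0.3)]

hinge_score = one_dof_score(hinge)
mixed_score = one_dof_score(mixed)
```

A score close to 1 for `hinge` and a clearly lower score for `mixed` matches the intuition that a stably grasped object should show little motion outside one latent axis. Translation and the choice of reference frame are ignored here for brevity.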

@misc{zhu2024grip,
  title={Get a Grip: Reconstructing Hand-Object Stable Grasps in Egocentric Videos},
  author={Zhifan Zhu and Dima Damen},
  year={2024},
  eprint={2312.15719},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}